Requests Introduction

- A third-party HTTP client library for Python. Official site | Doc

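Features

HTTP keep-alive and connection pooling, session persistence with cookies, file uploads, automatic detection of the response encoding, internationalized URLs, automatic encoding of POST data, and more.

vs. urllib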

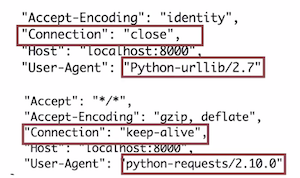

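urllib, urllib2 and urllib3: urllib and urllib2 are independent Python 2 standard-library modules (in Python 3, urllib2 was merged into urllib.request and urllib.error), while urllib3 is a third-party library. Requests is built on urllib3 and supports keep-alive (e.g. reusing one socket for multiple requests), which makes it more convenient to use.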

Installation

pip install requests

Usage

import requests

response = requests.get('http://www.baidu.com')
print(type(response))
print(response.status_code, response.reason)
print(response.encoding, response.apparent_encoding)
print(response.request.headers)
print(response.headers)
print(response.content)

Note:

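- Requests' default transport adapter uses blocking I/O. Accessing Response.content blocks until the entire response has been downloaded. Streaming lets you receive the response a small piece at a time, but those reads still block.
- For non-blocking I/O, consider other asynchronous frameworks, e.g. grequests or requests-futures.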

Requests Basic Objects and Methods

Request Object

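- requests.request(method, url, **kwargs): constructs and sends a request. This is the base method that all the methods below build on (method is one of the 7 HTTP methods: GET, HEAD, POST, PUT, PATCH, DELETE or OPTIONS)
- requests.get(url, params=None, **kwargs)
- requests.head(url, **kwargs)
- requests.post(url, data=None, json=None, **kwargs)
- requests.put/patch(url, data=None, **kwargs)
- requests.delete(url, **kwargs)
- Method parameters (**kwargs):
  - params: added to the URL as query parameters (dict or bytes)
  - data: the request body (dict, bytes or file-like object)
  - json: the request body, serialized as JSON
  - headers: custom HTTP headers (dict)
  - cookies: cookies to send with the request (dict or CookieJar)
  - auth: HTTP authentication (e.g. a (user, password) tuple)
  - files: files to upload (dict)
  - timeout: timeout in seconds; the default None means wait forever
  - proxies: proxy servers to use, optionally with login credentials (dict)
  - allow_redirects: whether to follow redirects (True/False; default True, except for head())
  - stream: whether to defer downloading the response body (True/False)
    - False (default): the body is downloaded immediately and held in memory (a very large file can exhaust memory)
    - True: downloading the body is deferred until Response.content is accessed. The connection is not released until all data has been read or Response.close() is called. Send the request in a with statement to guarantee that it gets closed.
  - verify: whether to verify the server's SSL certificate (True/False, default True)
  - cert: path to a local client-side SSL certificate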

Response Object

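- Class: <class 'requests.models.Response'>
- Status: response.status_code, response.reason
- Body:
  - response.raw: the raw response (urllib3.response.HTTPResponse); read it with raw.read(), which requires stream=True on the request
  - response.content: the body as bytes
  - response.text: the body as a string, decoded with response.encoding
  - response.json(): the body parsed as JSON (typically a dict)
- Headers: response.headers (response headers), response.request.headers (request headers)
- Encoding:
  - response.encoding: the encoding guessed from the HTTP headers
  - response.apparent_encoding: a fallback for encoding, detected by analyzing the response content itself
- response.raise_for_status(): raises requests.HTTPError if the status code is a 4xx client error or a 5xx server error, and does nothing otherwise. No extra if statement is needed, so it fits neatly into try/except error handling.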
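The rule behind raise_for_status() can be sketched with the standard library alone. This is a simplified stand-in, not the library's code: `HTTPError` and `check_status` are hypothetical names. The sketch only mirrors the behavior described above: 4xx and 5xx codes raise, and everything else passes silently.

```python
class HTTPError(Exception):
    """Hypothetical stand-in for requests.HTTPError."""


def check_status(status_code: int, reason: str, url: str) -> None:
    """Raise HTTPError for 4xx/5xx status codes; do nothing otherwise."""
    if 400 <= status_code < 500:
        raise HTTPError(f"{status_code} Client Error: {reason} for url: {url}")
    if 500 <= status_code < 600:
        raise HTTPError(f"{status_code} Server Error: {reason} for url: {url}")


# 2xx and 3xx codes pass silently, so no extra "if" is needed at the call site.
check_status(200, "OK", "http://httpbin.org/get")
check_status(302, "FOUND", "http://httpbin.org/redirect/1")

try:
    check_status(404, "NOT FOUND", "http://httpbin.org/status/404")
except HTTPError as e:
    print(e)  # 404 Client Error: NOT FOUND for url: http://httpbin.org/status/404
```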

Exception Objects

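- requests.HTTPError: an HTTP error occurred
- requests.URLRequired: a valid URL is missing
- requests.Timeout: the request timed out (base class of ConnectTimeout and ReadTimeout)
- requests.ConnectTimeout: timed out while connecting to the remote server
- requests.ConnectionError: a network connection error, e.g. a failed DNS lookup or a refused connection
- requests.TooManyRedirects: the maximum number of redirects was exceeded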

Requests Basic Examples

Visit http://httpbin.org/

requests.get

>>> r=requests.get('http://httpbin.org/get')

>>> type(r)

<class 'requests.models.Response'>

>>> r.status_code,r.reason

(200,'OK')

>>> r.encoding,r.apparent_encoding

(None, 'ascii')

>>> r.headers

{'Access-Control-Allow-Credentials': 'true', 'Access-Control-Allow-Origin': '*', 'Content-Encoding': 'gzip', 'Content-Type': 'application/json', 'Date': 'Thu, 21 Mar 2019 16:40:42 GMT', 'Server': 'nginx', 'Content-Length': '184', 'Connection': 'keep-alive'}

>>> r.request.headers

{'User-Agent': 'python-requests/2.21.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive'}

>>> r.json()

{

"args": {},

"headers": {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate",

"Host": "httpbin.org",

"User-Agent": "python-requests/2.21.0"

},

...

}
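In the output above, r.encoding is None. That is because response.encoding comes only from the Content-Type header. The sketch below uses the standard library's email.message.Message to parse that header. It roughly mimics the rule of the requests 2.21 release used in this section: a charset parameter wins, text/* without a charset falls back to ISO-8859-1, and anything else gives None. Newer releases may differ (for example for application/json), and `encoding_from_content_type` is a hypothetical helper, not the library's function.

```python
from email.message import Message
from typing import Optional


def encoding_from_content_type(content_type: Optional[str]) -> Optional[str]:
    """Guess a response encoding from a Content-Type header value (simplified)."""
    if not content_type:
        return None
    msg = Message()
    msg["Content-Type"] = content_type
    charset = msg.get_content_charset()  # lower-cased charset parameter, or None
    if charset:
        return charset
    if msg.get_content_maintype() == "text":
        return "ISO-8859-1"  # the old RFC 2616 default for text/* without a charset
    return None


print(encoding_from_content_type("application/json"))          # None
print(encoding_from_content_type("text/html"))                 # ISO-8859-1
print(encoding_from_content_type("text/html; charset=UTF-8"))  # utf-8
```

This fallback explains the pattern used later in this document. A text/html page served without a charset parameter would be decoded as ISO-8859-1, so the examples assign r.encoding = r.apparent_encoding before reading r.text.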

requests.head

>>> r=requests.head('http://httpbin.org/get')

>>> r.text

''

>>> r.headers

{'Access-Control-Allow-Credentials': 'true', 'Access-Control-Allow-Origin': '*', 'Content-Encoding': 'gzip', 'Content-Type': 'application/json', 'Date': 'Tue, 19 Mar 2019 13:16:24 GMT', 'Server': 'nginx', 'Connection': 'keep-alive'}

requests.post+data/json

# `data={...}`

# dict: automatically form-encoded

# content-type: application/x-www-form-urlencoded

# request body: key1=value1&key2=value2

>>> record={'key1':'value1','key2':'value2'}

>>> r=requests.post('http://httpbin.org/post',data=record)

>>> r.request.headers['content-type']

'application/x-www-form-urlencoded'

>>> r.json()

{'args': {}, 'data': '', 'files': {}, 'form': {'key1': 'value1', 'key2': 'value2'}, 'headers': {'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'Content-Length': '23', 'Content-Type': 'application/x-www-form-urlencoded', 'Host': 'httpbin.org', 'User-Agent': 'python-requests/2.21.0'}, 'json': None, ...}

# `data='...'`

# str: sent as-is as the raw body (httpbin echoes it under 'data'); no Content-Type is set

# request body: 'ABC123'

>>> record="ABC123"

>>> r=requests.post('http://httpbin.org/post',data=record)

>>> r.request.headers.get('content-type',None)

None

>>> r.json()

{'args': {}, 'data': 'ABC123', 'files': {}, 'form': {}, 'headers': {'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'Content-Length': '6', 'Host': 'httpbin.org', 'User-Agent': 'python-requests/2.21.0'}, 'json': None, ...}

# `json={...}`

# dict

# content-type: application/json

# request body: {"key1": "value1", "key2": "value2"}

>>> record={'key1':'value1','key2':'value2'}

>>> r = requests.request('POST', 'http://httpbin.org/post', json=record)

>>> r.request.headers['Content-Type']

'application/json'

>>> r.json()

{'args': {}, 'data': '{"key1": "value1", "key2": "value2"}', 'files': {}, 'form': {}, 'headers': {'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'Content-Length': '36', 'Content-Type': 'application/json', 'Host': 'httpbin.org', 'User-Agent': 'python-requests/2.21.0'}, 'json': {'key1': 'value1', 'key2': 'value2'}, ...}
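The two dict bodies above can be reproduced with the standard library. This also explains the Content-Length values httpbin echoed back (23 and 36). The sketch uses urllib.parse.urlencode and json.dumps as approximations; requests' own encoding may differ in edge cases.

```python
import json
from urllib.parse import urlencode

record = {"key1": "value1", "key2": "value2"}

form_body = urlencode(record)   # what data={...} sends: application/x-www-form-urlencoded
json_body = json.dumps(record)  # what json={...} sends: application/json

print(form_body, len(form_body))  # key1=value1&key2=value2 23
print(json_body, len(json_body))  # {"key1": "value1", "key2": "value2"} 36
```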

kwargs: params

>>> kv = {'key1': 'value1', 'key2': 'value2'}

>>> r = requests.request('GET', 'http://httpbin.org/get', params=kv)

>>> r.url

'http://httpbin.org/get?key1=value1&key2=value2'

>>> r.json()

{'args': {'key1': 'value1', 'key2': 'value2'}, 'headers': {'Accept': '*/*', 'Accept-Encoding': 'gzip, deflate', 'Host': 'httpbin.org', 'User-Agent': 'python-requests/2.21.0'}, 'origin': '117.83.222.100, 117.83.222.100', 'url': 'https://httpbin.org/get?key1=value1&key2=value2'}
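params also accepts list values, in which case each item is repeated under the same key. You can preview the resulting query string with urllib.parse.urlencode(..., doseq=True). Treat this as a stdlib approximation of what requests appends to the URL; the base URL here is simply httpbin's /get endpoint.

```python
from urllib.parse import urlencode

kv = {"key1": "value1", "key2": ["value2", "value3"]}
query = urlencode(kv, doseq=True)  # doseq=True expands list values into repeated keys
print("http://httpbin.org/get?" + query)
# http://httpbin.org/get?key1=value1&key2=value2&key2=value3
```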

kwargs: auth

import base64

import requests
from requests.auth import AuthBase

Endpoint = "http://httpbin.org"

# 1. basic auth
r = requests.request('GET', Endpoint + '/basic-auth/Tom/Tom111')
print(r.status_code, r.reason)
# 401 UNAUTHORIZED

r = requests.request('GET', Endpoint + '/basic-auth/Tom/Tom111', auth=('Tom', 'Tom123'))
print(r.status_code, r.reason)
# 401 UNAUTHORIZED

r = requests.request('GET', Endpoint + '/basic-auth/Tom/Tom123', auth=('Tom', 'Tom123'))
print(r.status_code, r.reason)
print(r.request.headers)
print(r.text)
# 200 OK
# {'User-Agent': 'python-requests/2.21.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive', 'Authorization': 'Basic VG9tOlRvbTEyMw=='}
# {
#   "authenticated": true,
#   "user": "Tom"
# }
print(base64.b64decode('VG9tOlRvbTEyMw=='))  # b'Tom:Tom123'
print('--------------------------')

# 2. bearer token (OAuth 2.0 style)
r = requests.request('GET', Endpoint + '/bearer')
print(r.status_code, r.reason)  # 401 UNAUTHORIZED
print(r.headers)  # Note: 'WWW-Authenticate': 'Bearer'
r = requests.request('GET', Endpoint + '/bearer', headers={'Authorization': 'Bearer 1234567'})
print(r.status_code, r.reason)  # 200 OK
print(r.headers)
print('--------------------------')


# 3. advanced: custom authentication (subclass requests.auth.AuthBase)
class MyAuth(AuthBase):
    def __init__(self, authType, token):
        self.authType = authType
        self.token = token

    def __call__(self, req):
        # receives the prepared request just before it is sent
        req.headers['Authorization'] = ' '.join([self.authType, self.token])
        return req


r = requests.request('GET', Endpoint + '/bearer', auth=MyAuth('Bearer', '123456'))
print(r.status_code, r.reason)  # 200 OK
print("Request Headers:", r.request.headers)
print("Response Headers:", r.headers)
print("Response Text:", r.text)
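The Authorization header shown above is just "Basic " followed by the Base64 encoding of user:password (RFC 7617). Here is a minimal stdlib sketch; `basic_auth_header` is a hypothetical helper name. Base64 is an encoding, not encryption, so Basic auth should only be sent over HTTPS.

```python
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


print(basic_auth_header("Tom", "Tom123"))  # Basic VG9tOlRvbTEyMw==
print(basic_auth_header("user", "pass"))   # Basic dXNlcjpwYXNz
```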

kwargs: cookies

>>> r=requests.request('GET','http://httpbin.org/cookies/set?freedom=test123')

>>> r.cookies

>>> r.request.headers

{'User-Agent': 'python-requests/2.21.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive', 'Cookie': 'freedom=test123'}

>>> cookies = dict(cookies_are='working') # {'cookies_are':'working'}

>>> r = requests.get('http://httpbin.org/cookies', cookies=cookies)

>>> r.text

'{"cookies": {"cookies_are": "working"}}'

>>> jar = requests.cookies.RequestsCookieJar()

>>> jar.set('tasty_cookie', 'yum', domain='httpbin.org', path='/cookies')

>>> jar.set('gross_cookie', 'blech', domain='httpbin.org', path='/get')

>>> r = requests.get('http://httpbin.org/cookies', cookies=jar)

>>> r.text

'{"cookies": {"tasty_cookie": "yum"}}'
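In the jar example, tasty_cookie is sent and gross_cookie is not. A cookie is only sent when its Path matches the request path. The rule from RFC 6265, section 5.1.4, can be sketched as below. `path_matches` is a hypothetical helper, and domain matching is left out.

```python
def path_matches(request_path: str, cookie_path: str) -> bool:
    """RFC 6265 path-match (domain matching not included)."""
    if request_path == cookie_path:
        return True
    if request_path.startswith(cookie_path):
        if cookie_path.endswith("/"):
            return True
        # the next character after the cookie path must start a new segment
        if request_path[len(cookie_path)] == "/":
            return True
    return False


jar = {"tasty_cookie": ("yum", "/cookies"), "gross_cookie": ("blech", "/get")}
sent = {name: value for name, (value, path) in jar.items()
        if path_matches("/cookies", path)}
print(sent)  # {'tasty_cookie': 'yum'}
```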

kwargs: timeout

import requests


def timeout_request(url, timeout):
    try:
        resp = requests.get(url, timeout=timeout)
        resp.raise_for_status()
    except (requests.Timeout, requests.HTTPError) as e:
        # a tuple catches either exception type
        print(e)
    except Exception as e:
        print("unknown exception:", e)
    else:
        print(resp.text)
        print(resp.status_code)


timeout_request('http://httpbin.org/get', 0.1)
# HTTPConnectionPool(host='httpbin.org', port=80): Max retries exceeded with url: /get (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x1025d9400>, 'Connection to httpbin.org timed out. (connect timeout=0.1)'))
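A common mistake when catching several exception types is writing `except A or B`. Python evaluates `A or B` first, and because a class is always truthy, the clause matches only A. The sketch below uses stand-in exception classes (hypothetical, not requests' own) to show the difference; the correct form is a tuple.

```python
class Timeout(Exception):
    """Hypothetical stand-in for requests.Timeout."""


class HTTPError(Exception):
    """Hypothetical stand-in for requests.HTTPError."""


def caught_by_first_clause(exc: Exception, use_tuple: bool) -> bool:
    """Return True if `exc` is handled by the first except clause."""
    # `Timeout or HTTPError` is evaluated before matching and yields just `Timeout`
    first = (Timeout, HTTPError) if use_tuple else (Timeout or HTTPError)
    try:
        raise exc
    except first:
        return True
    except Exception:
        return False


print((Timeout or HTTPError) is Timeout)                    # True
print(caught_by_first_clause(HTTPError(), use_tuple=False))  # False
print(caught_by_first_clause(HTTPError(), use_tuple=True))   # True
```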

kwargs: proxies

>>> pxs = {'http': 'http://user:pass@10.10.10.1:1234', 'https': 'https://10.10.10.1:4321'}

>>> r = requests.request('GET', 'http://www.baidu.com', proxies=pxs)
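When a proxy needs authentication, the credentials go into the proxy URL as user:pass@host:port. The stdlib urllib.parse.urlsplit shows how such a URL breaks down. requests extracts proxy credentials from the URL in a similar way, but this sketch is only an illustration. Reserved characters in the password have to be percent-encoded.

```python
from urllib.parse import unquote, urlsplit

proxy = urlsplit("http://user:pass@10.10.10.1:1234")
print(proxy.username, proxy.password)  # user pass
print(proxy.hostname, proxy.port)      # 10.10.10.1 1234

# A password such as "p@ss" must be written as "p%40ss" in the URL
# and decoded again before use.
tricky = urlsplit("http://user:p%40ss@10.10.10.1:1234")
print(tricky.password, unquote(tricky.password))  # p%40ss p@ss
```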

kwargs: files

import requests

Endpoint = "http://httpbin.org"

# upload a single file; the with statement closes it after the request
with open('黑洞1.jpg', 'rb') as fd:
    r = requests.post(Endpoint + '/post', files={'image': fd})
print(r.status_code, r.reason)
print(r.headers)
print(r.text[100:200])
print('--------------------------')

# POST Multiple Multipart-Encoded Files
# each entry: (field name, (file name, file object, content type))
with open('黑洞1.jpg', 'rb') as f1, open('极光1.jpg', 'rb') as f2:
    multiple_files = [
        ('images', ('黑洞1.jpg', f1, 'image/jpeg')),
        ('images', ('极光1.jpg', f2, 'image/jpeg')),
    ]
    r = requests.post(Endpoint + '/post', files=multiple_files)
print(r.status_code, r.reason)
print(r.headers)
print(r.text[100:200])
print('--------------------------')
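files= makes requests send a multipart/form-data body. The stdlib sketch below shows roughly what goes over the wire. `encode_multipart` and the fixed boundary are hypothetical; real clients, requests included, generate a random boundary.

```python
from typing import List, Tuple


def encode_multipart(files: List[Tuple[str, str, bytes, str]],
                     boundary: str) -> Tuple[bytes, str]:
    """Encode (field, filename, content, mime_type) tuples as multipart/form-data."""
    parts = []
    for field, filename, content, mime_type in files:
        parts.append(f"--{boundary}\r\n".encode())
        parts.append(
            f'Content-Disposition: form-data; name="{field}"; '
            f'filename="{filename}"\r\n'.encode()
        )
        parts.append(f"Content-Type: {mime_type}\r\n\r\n".encode())
        parts.append(content + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode())  # closing boundary
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


body, ctype = encode_multipart(
    [("images", "a.jpg", b"\xff\xd8fake", "image/jpeg"),
     ("images", "b.jpg", b"\xff\xd8fake", "image/jpeg")],
    boundary="XyZ123",
)
print(ctype)                    # multipart/form-data; boundary=XyZ123
print(body.count(b"--XyZ123"))  # 3
```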

kwargs: stream

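import requests

Endpoint = "http://httpbin.org"

with requests.get(Endpoint + "/stream/3", stream=True) as r:
    print(r.status_code, r.reason)
    # 0 if the server sends no Content-Length header (e.g. a chunked response)
    contentLength = int(r.headers.get('content-length', 0))
    print("content-length:", contentLength)
    # At this point only the response headers have been downloaded and the
    # connection stays open, so we can decide how (or whether) to fetch the body.
    if contentLength < 100:
        print(r.content)
    else:
        print('read line by line')
        lines = r.iter_lines()  # iter_content() would iterate over the body chunk by chunk instead
        for line in lines:
            if line:  # skip empty keep-alive lines
                print(line)
print('Done')
print('--------------------------')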
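iter_lines() has to stitch a line back together when it is split across two network chunks. The sketch below shows that buffering over an iterable of byte chunks. It is a simplified version: it splits on b"\n" only, whereas requests' own implementation uses splitlines() and supports a custom delimiter.

```python
from typing import Iterable, Iterator


def iter_lines(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield complete lines from byte chunks, buffering partial lines."""
    pending = b""
    for chunk in chunks:
        pending += chunk
        *complete, pending = pending.split(b"\n")  # last piece may be incomplete
        yield from complete
    if pending:
        yield pending  # final line without a trailing newline


print(list(iter_lines([b'{"id": 0}\n{"i', b'd": 1}\n', b'{"id": 2}'])))
# [b'{"id": 0}', b'{"id": 1}', b'{"id": 2}']
```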

Exception

import requests


def do_request(url):
    try:
        r = requests.get(url, timeout=0.1)
        r.raise_for_status()
        # decode with the encoding detected from the content
        r.encoding = r.apparent_encoding
    except (requests.Timeout, requests.HTTPError) as e:
        print(e)
    except Exception as e:
        print("Request Error:", e)
    else:
        print(r.text)
        print(r.status_code)
        return r


if __name__ == '__main__':
    do_request("http://www.baidu.com")

Advanced Usage of Requests

Event hooks

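import requests

Endpoint = "http://httpbin.org"


def get_key_info(response, *args, **kwargs):
    # response hook: called with the Response object once it has been received
    print("callback:content-type", response.headers['Content-Type'])


r = requests.get(Endpoint + '/get', hooks=dict(response=get_key_info))
print(r.status_code, r.reason)
# callback:content-type application/json
# 200 OK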
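Hooks registered under the response key are called with the Response when it arrives. If a hook returns a value other than None, that value replaces the response. The stdlib sketch below shows this dispatch rule, with a plain dict standing in for a Response object. `dispatch_hooks` is a hypothetical name, not requests' internal function.

```python
from typing import Any, Callable, Dict, List


def dispatch_hooks(hooks: Dict[str, List[Callable[..., Any]]],
                   event: str, data: Any, **kwargs: Any) -> Any:
    """Run each hook for `event` in order; a non-None return value replaces `data`."""
    for hook in hooks.get(event, []):
        result = hook(data, **kwargs)
        if result is not None:
            data = result
    return data


def log_content_type(response, *args, **kwargs):
    print("callback:content-type", response["headers"]["Content-Type"])  # returns None


def add_flag(response, *args, **kwargs):
    return {**response, "checked": True}  # returned value replaces the response


fake_response = {"status": 200, "headers": {"Content-Type": "application/json"}}
result = dispatch_hooks({"response": [log_content_type, add_flag]}, "response", fake_response)
# callback:content-type application/json
print(result["checked"])  # True
```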

Session

Persisting certain parameters across requests

import requests

Endpoint = "http://httpbin.org"

# Cookies are kept across all requests made from the same Session instance,
# which also reuses connections through urllib3's connection pooling
s = requests.Session()
r = s.get(Endpoint + '/cookies/set/mycookie/123456')
print("set cookies", r.status_code, r.reason)
# set cookies 200 OK
r = s.get(Endpoint + "/cookies")
print("get cookies", r.status_code, r.reason)
# get cookies 200 OK
print(r.text)
# {
#   "cookies": {
#     "mycookie": "123456"
#   }
# }

Providing default data to request methods

# Done by setting attributes on the Session object
# (note: method-level parameters override session-level ones)
s = requests.Session()
s.auth = ('user', 'pass')
s.headers.update({'x-test': 'true'})

# both 'x-test' and 'x-test2' are sent
r = s.get(Endpoint + '/headers', headers={'x-test2': 'true'})
print(r.request.headers)
# {'User-Agent': 'python-requests/2.21.0', 'Accept-Encoding': 'gzip, deflate', 'Accept': '*/*', 'Connection': 'keep-alive', 'x-test': 'true', 'x-test2': 'true', 'Authorization': 'Basic dXNlcjpwYXNz'}

Using a Session as a context manager

with requests.Session() as s:
    # ensures the session is closed when the with block exits, even if an exception occurs
    s.get('http://httpbin.org/cookies/set/mycookie/Test123')
    r = s.get(Endpoint + "/cookies")
    print("set cookies", r.status_code, r.reason)
    print(r.text)
    # {
    #   "cookies": {
    #     "mycookie": "Test123"
    #   }
    # }

print("out with:")
# the session has been closed here; as the output below shows, the request
# still succeeds and the session's cookie jar is kept
r = s.get(Endpoint + "/cookies")
print("get cookies", r.status_code, r.reason)
print(r.text)
# {
#   "cookies": {
#     "mycookie": "Test123"
#   }
# }
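Method-level parameters take precedence over session-level ones. For headers, the merge can be sketched as a dict update in which the request's values win. A key explicitly set to None is dropped, which mirrors the documented way of omitting a session header for a single request. `merge_headers` is a hypothetical helper, and it ignores the case-insensitivity of real header names.

```python
from typing import Dict, Optional


def merge_headers(session_headers: Dict[str, str],
                  request_headers: Optional[Dict[str, Optional[str]]]) -> Dict[str, str]:
    """Request-level headers override session-level ones; None removes a key."""
    merged: Dict[str, Optional[str]] = dict(session_headers)
    merged.update(request_headers or {})
    return {k: v for k, v in merged.items() if v is not None}


session_headers = {"User-Agent": "python-requests/2.21.0", "x-test": "true"}
print(merge_headers(session_headers, {"x-test2": "true"}))
# {'User-Agent': 'python-requests/2.21.0', 'x-test': 'true', 'x-test2': 'true'}
print(merge_headers(session_headers, {"x-test": None}))
# {'User-Agent': 'python-requests/2.21.0'}
```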

Prepared Request

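# Lets you do extra processing on the body/headers before the request is sent
from requests import Request, Session

Endpoint = "http://httpbin.org"

s = Session()
req = Request('GET', Endpoint + '/get', headers={'User-Agent': 'fake1.0.0'})
prepared = req.prepare()  # to get a PreparedRequest that carries the Session's state (cookies, default headers), use `s.prepare_request(req)`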

# could do something with prepared.body/prepared.headers here

# ...

resp=s.send(prepared,timeout=3)

print(resp.status_code,resp.reason)

print("request headers:",resp.request.headers)

# {'User-Agent': 'fake1.0.0'}

print("response headers:",resp.headers)

# {'Access-Control-Allow-Credentials': 'true', 'Access-Control-Allow-Origin': '*', 'Content-Type': 'application/json', 'Date': 'Thu, 21 Mar 2019 15:47:30 GMT', 'Server': 'nginx', 'Content-Length': '216', 'Connection': 'keep-alive'}

print(resp.text)

# {

# "args": {},

# "headers": {

# "Accept-Encoding": "identity",

# "Host": "httpbin.org",

# "User-Agent": "fake1.0.0"

# },

# "origin": "117.83.222.100, 117.83.222.100",

# "url": "https://httpbin.org/get"

# }

Chunk-Encoded Requests

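# Chunked transfer encoding: pass a generator, or any iterator without a known length, as data
import requests

Endpoint = "http://httpbin.org"


def gen():
    yield b'hi '
    yield b'there! '
    yield b'How are you?'
    yield b'This is for test 123567890.....!'
    yield b'Test ABCDEFG HIGKLMN OPQ RST UVWXYZ.....!'


r = requests.post(Endpoint + '/post', data=gen())  # optionally add stream=True to download the response lazily
print(r.status_code, r.reason, r.headers['content-length'])
for chunk in r.iter_content(chunk_size=100):  # with stream=True, chunk_size=None yields data as it arrives
    if chunk:
        print(chunk)
print('done')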
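A generator has no known length, so requests sends such a body with Transfer-Encoding: chunked. On the wire, each chunk is its size in hexadecimal, then CRLF, the bytes, and another CRLF; a zero-size chunk ends the body (RFC 7230, section 4.1). A stdlib sketch of that framing follows. `encode_chunked` is a hypothetical helper, and trailers are omitted.

```python
from typing import Iterable


def encode_chunked(chunks: Iterable[bytes]) -> bytes:
    """Frame byte chunks using HTTP/1.1 chunked transfer coding."""
    out = bytearray()
    for chunk in chunks:
        if not chunk:
            continue  # a zero-length chunk would signal the end of the body
        out += b"%x\r\n" % len(chunk) + chunk + b"\r\n"
    out += b"0\r\n\r\n"  # last chunk, no trailers
    return bytes(out)


def gen():
    yield b"hi "
    yield b"there! "
    yield b"How are you?"


print(encode_chunked(gen()))
# b'3\r\nhi \r\n7\r\nthere! \r\nc\r\nHow are you?\r\n0\r\n\r\n'
```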

Requests Application Examples

Download pic from http://www.nationalgeographic.com.cn

One-shot download (small files, stream=False)

import os

import requests


def download_small_file(url):
    try:
        r = requests.get(url)
        r.raise_for_status()
        print(r.status_code, r.reason)
        contentType = r.headers["Content-Type"]
        contentLength = int(r.headers.get("Content-Length", 0))
        print(contentType, contentLength)
    except Exception as e:
        print(e)
    else:
        filename = r.url.split('/')[-1]
        print('filename:', filename)
        target = os.path.join('.', filename)
        if os.path.exists(target) and os.path.getsize(target):
            print('Exist -- Skip download!')
        else:
            with open(target, 'wb') as fd:
                fd.write(r.content)
            print('done!')


if __name__ == '__main__':
    import time

    print('start')
    start = time.time()
    url = "http://image.nationalgeographic.com.cn/2017/0211/20170211061910157.jpg"
    download_small_file(url)
    end = time.time()
    print('Runs %0.2f seconds.' % (end - start))
    print('end')
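Using `r.url.split('/')[-1]` as the file name breaks when the URL carries a query string or percent-encoded characters. Below is a slightly more robust stdlib sketch. `filename_from_url` and the "download.bin" fallback are assumptions, not part of the original script.

```python
import os
from urllib.parse import unquote, urlsplit


def filename_from_url(url: str, default: str = "download.bin") -> str:
    """Return the last path segment of a URL, ignoring query and fragment."""
    path = urlsplit(url).path            # drops ?query and #fragment
    name = os.path.basename(unquote(path))
    return name or default               # URLs ending in "/" have no file name


print(filename_from_url("http://image.nationalgeographic.com.cn/2017/0211/20170211061910157.jpg"))
# 20170211061910157.jpg
print(filename_from_url("http://example.com/a/b%20c.jpg?size=large"))  # b c.jpg
print(filename_from_url("http://example.com/"))                         # download.bin
```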

Streaming chunked download (large files, stream=True)

import os

import requests


def download_large_file(url):
    r = None
    try:
        r = requests.get(url, stream=True)
        r.raise_for_status()
        print(r.status_code, r.reason)
        contentType = r.headers["Content-Type"]
        contentLength = int(r.headers.get("Content-Length", 0))
        print(contentType, contentLength)
    except Exception as e:
        print(e)
    else:
        filename = r.url.split('/')[-1]
        print('filename:', filename)
        target = os.path.join('.', filename)
        if os.path.exists(target) and os.path.getsize(target):
            print('Exist -- Skip download!')
        else:
            with open(target, 'wb') as fd:
                # the body is pulled from the connection 10 KB at a time
                for chunk in r.iter_content(chunk_size=10240):
                    if chunk:
                        fd.write(chunk)
                        print('download:', len(chunk))
    finally:
        # with stream=True the connection stays open until it is closed;
        # r is still None if requests.get() itself raised
        if r is not None:
            r.close()
            print('close')


if __name__ == '__main__':
    import time

    print('start')
    start = time.time()
    url = "http://image.nationalgeographic.com.cn/2017/0211/20170211061910157.jpg"
    download_large_file(url)
    end = time.time()
    print('Runs %0.2f seconds.' % (end - start))
    print('end')
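The "Exist -- Skip download!" check has a caveat. If a streaming download is interrupted, a partial file is left behind, and the next run skips it as if it were complete. A common fix is to write to a temporary name and rename the file only after the last chunk. The sketch below does this; `save_chunks` and the ".part" suffix are assumptions. It accepts any iterable of byte chunks, such as r.iter_content(chunk_size=10240).

```python
import os
import tempfile
from typing import Iterable


def save_chunks(chunks: Iterable[bytes], target: str) -> int:
    """Write chunks to target + '.part', then atomically rename it to target."""
    tmp = target + ".part"
    written = 0
    try:
        with open(tmp, "wb") as fd:
            for chunk in chunks:
                if chunk:  # skip empty chunks
                    fd.write(chunk)
                    written += len(chunk)
        os.replace(tmp, target)  # atomic when both paths are on the same filesystem
    except BaseException:
        if os.path.exists(tmp):
            os.remove(tmp)  # leave no partial file behind
        raise
    return written


with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "photo.jpg")
    print(save_chunks([b"abc", b"", b"def"], path))  # 6
    print(os.listdir(tmpdir))                        # ['photo.jpg']
```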

Showing a progress bar

import os

import requests


def download_with_progress(url):
    try:
        with requests.get(url, stream=True) as r:
            r.raise_for_status()
            print(r.status_code, r.reason)
            contentType = r.headers["Content-Type"]
            contentLength = int(r.headers.get("Content-Length", 0))
            print(contentType, contentLength)
            filename = r.url.split('/')[-1]
            print('filename:', filename)
            target = os.path.join('.', filename)
            if os.path.exists(target) and os.path.getsize(target):
                print('Exist -- Skip download!')
            else:
                chunk_size = 1024
                progress = ProgressBar(filename, total=contentLength, chunk_size=chunk_size, unit="KB")
                with open(target, 'wb') as fd:
                    for chunk in r.iter_content(chunk_size=chunk_size):
                        if chunk:
                            fd.write(chunk)
                            progress.refresh(len(chunk))
    except Exception as e:
        print(e)
    print('done')


# ProgressBar: prints "【title】status downloaded / total" on one line, redrawn with '\r'
class ProgressBar(object):
    def __init__(self, title, total, chunk_size=1024, unit='KB'):
        self.title = title
        self.total = total
        self.chunk_size = chunk_size  # bytes per display unit (1024 bytes = 1 KB)
        self.unit = unit
        self.progress = 0.0
        self.status = "......"

    def __info(self):
        return "【%s】%s %.2f%s / %.2f%s" % (
            self.title, self.status,
            self.progress / self.chunk_size, self.unit,
            self.total / self.chunk_size, self.unit)

    def refresh(self, progress):
        self.progress += progress
        self.status = "......"
        end_str = '\r'  # redraw on the same line
        if self.total > 0 and self.progress >= self.total:
            end_str = '\n'
            self.status = 'completed'
        print(self.__info(), end=end_str)


if __name__ == '__main__':
    import time

    print('start')
    start = time.time()
    url = "http://image.nationalgeographic.com.cn/2017/0211/20170211061910157.jpg"
    download_with_progress(url)
    end = time.time()
    print('Runs %0.2f seconds.' % (end - start))
    print('end')

Multi-task downloads

Multi-process download: parallel (truly simultaneous)

import multiprocessing
import os
from multiprocessing import Pool


# do multiple downloads - multiprocessing
def do_multiple_download_multiprocessing(url_list, targetDir):
    cpu_cnt = multiprocessing.cpu_count()
    print("CPU count: %s, Parent Pid: %s" % (cpu_cnt, os.getpid()))
    p = Pool(cpu_cnt)
    results = []
    for i, url in enumerate(url_list):
        result = p.apply_async(do_download, args=(i, url, targetDir, False), callback=print_return)
        results.append(result)
    print('Waiting for all subprocesses done...')
    p.close()
    p.join()
    for result in results:
        print(os.getpid(), result.get())
    print('All subprocesses done.')


# callback: runs in the parent process with each task's return value
def print_return(result):
    print(os.getpid(), result)

Multi-threaded download: concurrent (interleaved)

import threading


def do_multiple_downloads_threads(url_list, targetDir):
    thread_list = []
    for i, url in enumerate(url_list):
        thread = threading.Thread(target=do_download, args=(i, url, targetDir, True))
        thread.start()
        thread_list.append(thread)
    print('Waiting for all threads done...')
    for thread in thread_list:
        thread.join()
    print('All threads done.')

verify

import os
import threading
import time

import requests
from bs4 import BeautifulSoup

# Assumes ProgressBar, do_multiple_download_multiprocessing and
# do_multiple_downloads_threads (above) are defined in the same module.


# do download using `requests`
def do_download(i, url, targetDir, isPrint=False):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:60.0) Gecko/20100101 Firefox/60.0'
    }
    try:
        # verify=False skips SSL certificate verification (urllib3 warns about it)
        response = requests.get(url, headers=headers, stream=True, verify=False)
        response.raise_for_status()
    except Exception as e:
        print("Occur Exception:", e)
    else:
        content_length = int(response.headers.get('Content-Length', 0))
        filename = str(i) + "." + url.split('/')[-1]
        print(response.status_code, response.reason, content_length, filename)
        progressBar = ProgressBar(filename, total=content_length, chunk_size=1024, unit="KB")
        with open(os.path.join(targetDir, filename), 'wb') as fd:
            for chunk in response.iter_content(chunk_size=1024):
                if chunk:
                    fd.write(chunk)
                    progressBar.refresh(len(chunk))
        if isPrint:
            print(os.getpid(), threading.current_thread().name, filename, "Done!")
        return '%s %s %s Done' % (os.getpid(), threading.current_thread().name, filename)


# prepare download urls
def url_list_crawler():
    url = "http://m.ngchina.com.cn/travel/photo_galleries/5793.html"
    response = requests.get(url)
    print(response.status_code, response.reason, response.encoding, response.apparent_encoding)
    response.encoding = response.apparent_encoding
    soup = BeautifulSoup(response.text, 'html.parser')
    # results = soup.select('div#slideBox ul a img')
    # results = soup.find_all('img')
    results = soup.select("div.sub_center img[src^='http']")
    url_list = [r["src"] for r in results]
    print("url_list:", len(url_list))
    print(url_list)
    return url_list


# main
if __name__ == '__main__':
    print('start')
    targetDir = "/Users/cj/space/python/download"
    url = "http://image.ngchina.com.cn/2019/0325/20190325110244384.jpg"
    url_list = url_list_crawler()

    start = time.time()
    # 0 download one file using `requests`
    do_download("A", url, targetDir)
    end = time.time()
    print('Total cost %0.2f seconds.' % (end - start))
    start = end

    # 1 using multiple processes
    do_multiple_download_multiprocessing(url_list, targetDir)
    end = time.time()
    print('Total cost %0.2f seconds.' % (end - start))
    start = end

    # 2 using multiple threads
    do_multiple_downloads_threads(url_list, targetDir)
    end = time.time()
    print('Total cost %0.2f seconds.' % (end - start))
    start = end
    print('end')
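The manual Thread and Pool bookkeeping above can also be written with concurrent.futures. That module collects return values for you and re-raises a worker's exception when its result is read. The sketch below swaps in ThreadPoolExecutor, which is a different API from the one used above. `fake_download` is a hypothetical stand-in for do_download that sleeps instead of touching the network.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(i: int, url: str) -> str:
    """Hypothetical stand-in for do_download: sleeps to simulate blocking network I/O."""
    time.sleep(0.2)
    return "%s.%s Done" % (i, url.rsplit("/", 1)[-1])


urls = ["http://example.com/img/%d.jpg" % n for n in range(5)]

start = time.time()
with ThreadPoolExecutor(max_workers=5) as pool:
    # map() keeps the input order and re-raises a worker's exception when its result is read
    results = list(pool.map(fake_download, range(len(urls)), urls))
elapsed = time.time() - start

print(results[0])  # 0.0.jpg Done
print("elapsed %.2f s" % elapsed)  # roughly 0.2 s here, versus about 1 s if run one after another
```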

aiohttp

Asynchronous HTTP Client/Server for asyncio and Python.

- Supports both the HTTP client and the HTTP server side

- A library for asynchronous web services (requests, by contrast, is synchronous and blocking)
- Supports server and client WebSockets out of the box, without callback hell

- install:

pip install aiohttp

Client Sample

Refer to Client Quickstart

import asyncio

import aiohttp


async def fetch(session, url):
    async with session.get(url) as response:
        return await response.text()


async def main():
    async with aiohttp.ClientSession() as session:
        html = await fetch(session, 'http://httpbin.org/headers')
        print(html)


# asyncio.run() (Python 3.7+) creates an event loop, runs main() and closes the loop
asyncio.run(main())

Server Sample

Refer to Web Server Quickstart

from aiohttp import web


async def handle(request):
    name = request.match_info.get('name', "Anonymous")
    text = "Hello, " + name
    return web.Response(text=text)


app = web.Application()
app.add_routes([web.get('/', handle),
                web.get('/{name}', handle)])

web.run_app(app)

Application: downloading files concurrently with coroutines

Single-threaded, asynchronous, non-blocking

do download using aiohttp

async def do_aiohttp_download(session, i, url, targetDir):
    async with session.get(url) as response:
        content_length = int(response.headers.get('Content-Length', 0))
        filename = str(i) + "." + url.split('/')[-1]
        print(response.status, response.reason, content_length, filename)
        progressBar = ProgressBar(filename, total=content_length, chunk_size=1024, unit="KB")
        # note: the file writes themselves are ordinary blocking calls
        with open(os.path.join(targetDir, filename), 'wb') as fd:
            while True:
                chunk = await response.content.read(1024)
                if not chunk:
                    break
                fd.write(chunk)
                progressBar.refresh(len(chunk))
    # leaving `async with` releases the connection back to the session
    # print(filename, "Done!")
    return filename


# callback
def print_async_return(task):
    print(task.result(), "Done")


def print_async_return2(i, task):
    print(i, ":", task.result(), "Done")

case1: do one download

async def do_async_download(i, url, targetDir):
    async with aiohttp.ClientSession() as session:
        return await do_aiohttp_download(session, i, url, targetDir)

case2: do multiple download

# do multiple downloads - asyncio
async def do_multiple_downloads_async(url_list, targetDir):
    async with aiohttp.ClientSession() as session:
        # tasks = [do_aiohttp_download(session, i, url, targetDir) for i, url in enumerate(url_list)]
        # await asyncio.gather(*tasks)
        tasks = []
        for i, url in enumerate(url_list):
            task = asyncio.create_task(do_aiohttp_download(session, i, url, targetDir))
            # task.add_done_callback(print_async_return)
            task.add_done_callback(functools.partial(print_async_return2, i))
            tasks.append(task)
        await asyncio.gather(*tasks)

verify

import asyncio
import functools
import os
import time

import aiohttp
import requests
from bs4 import BeautifulSoup

# Assumes ProgressBar and the coroutines above are defined in the same module.


# prepare download urls
def url_list_crawler():
    url = "http://m.ngchina.com.cn/travel/photo_galleries/5793.html"
    response = requests.get(url)
    print(response.status_code, response.reason, response.encoding, response.apparent_encoding)
    response.encoding = response.apparent_encoding
    soup = BeautifulSoup(response.text, 'html.parser')
    # results = soup.select('div#slideBox ul a img')
    # results = soup.find_all('img')
    results = soup.select("div.sub_center img[src^='http']")
    url_list = [r["src"] for r in results]
    print("url_list:", len(url_list))
    print(url_list)
    return url_list


# main
if __name__ == '__main__':
    print('start')
    targetDir = "/Users/cj/space/python/download"
    url = "http://image.ngchina.com.cn/2019/0325/20190325110244384.jpg"
    url_list = url_list_crawler()

    start = time.time()
    # 1. download one file using `aiohttp`
    # asyncio.run() creates a fresh event loop on each call; calling run_until_complete()
    # on a loop after loop.close() would raise "RuntimeError: Event loop is closed"
    asyncio.run(do_async_download("A", url, targetDir))
    end = time.time()
    print('Total cost %0.2f seconds.' % (end - start))
    start = end

    # 2. download many files using `aiohttp`
    asyncio.run(do_multiple_downloads_async(url_list, targetDir))
    end = time.time()
    print('Total cost %0.2f seconds.' % (end - start))
    start = end
    print('end')
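The coroutine version above gets its speed-up from asyncio.gather. All downloads run on one thread, and each one yields control while it waits for I/O. The stdlib-only sketch below shows the pattern, with asyncio.sleep standing in for aiohttp's network reads; `fake_fetch` and the delays are assumptions. Like the fixed script, it uses asyncio.run(), which creates and closes a fresh event loop on each call.

```python
import asyncio
import time


async def fake_fetch(i: int, delay: float) -> str:
    """Hypothetical stand-in for an aiohttp download: awaits instead of blocking the thread."""
    await asyncio.sleep(delay)
    return f"file-{i}"


async def fetch_all(delays):
    tasks = [asyncio.create_task(fake_fetch(i, d)) for i, d in enumerate(delays)]
    # gather() returns results in task order, not completion order
    return await asyncio.gather(*tasks)


start = time.perf_counter()
results = asyncio.run(fetch_all([0.3, 0.1, 0.2]))
elapsed = time.perf_counter() - start
print(results)                     # ['file-0', 'file-1', 'file-2']
print("elapsed %.2f s" % elapsed)  # close to the longest delay (0.3 s), not the sum (0.6 s)
```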